I Taught Claude to Shop a Shopify Store

The Good, the Bad, and the "How Is This Supposed to Work?"

Testing a Shopify MCP server for AI shopping agents is harder than expected, because most agents don't use MCP yet, even though retailers need to prepare for an agentic commerce future. Using Claude's desktop app as a proxy for a shopping agent, we found that AI agents can make highly contextual product recommendations when given rich product data, but they struggle when availability and shipping information is incomplete. Success in agentic e-commerce will depend on comprehensive product descriptions, robust FAQ data, and complete transactional information that lets AI agents close a sale within a single conversation.

In my last article, An Agentic Shopping Future is Coming Part 2, we discussed the emergence of MCP servers as potential replacements for traditional websites when agents shop on behalf of customers. I also noted that although agents aren't using MCP yet, you should prepare for that future, and I recommended testing MCP if you run on an MCP-enabled platform like Shopify.

What I discovered is that testing your MCP server is more complex than you might expect. If agents such as OpenAI Agent or Perplexity Comet don't yet use MCP to shop your website, how do you test it?

Testing Shopify MCP

The first approach is to call the MCP server directly from a script: you send a query and inspect the raw response. I tested this approach with a custom Node script and received meaningful results.
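For readers who want to try the direct approach, here is a minimal Python sketch of such a call (it is not the Node script used for this article). MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The endpoint path `/api/mcp`, the tool name `search_shop_catalog`, and the example context string are assumptions to check against Shopify's current Storefront MCP documentation. The sketch also assumes the server accepts a single stateless `tools/call` POST and replies with plain JSON; a fully spec-compliant MCP client would first send an `initialize` request and be ready to read a streamed response.

```python
import json
import urllib.request
from typing import Any, Dict


# Assumption: the store's MCP endpoint is served at /api/mcp on the store domain.
def mcp_endpoint(shop_domain: str) -> str:
    return f"https://{shop_domain}/api/mcp"


def build_tool_call(tool: str, arguments: Dict[str, Any], request_id: int = 1) -> Dict[str, Any]:
    """Wrap a tool invocation in an MCP `tools/call` JSON-RPC 2.0 request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }


def call_tool(shop_domain: str, tool: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """POST one request and decode the reply (requires network access).

    Assumes a stateless server that answers with a plain JSON body; an SSE
    reply or a required `initialize` handshake would need extra handling.
    """
    body = json.dumps(build_tool_call(tool, arguments)).encode("utf-8")
    request = urllib.request.Request(
        mcp_endpoint(shop_domain),
        data=body,
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical tool name and context; print the request instead of sending it.
    payload = build_tool_call(
        "search_shop_catalog",
        {"query": "blush", "context": "Customer is looking for a blush"},
    )
    print(json.dumps(payload, indent=2))
    # To send it: call_tool("your-store.myshopify.com", "search_shop_catalog", {...})
```

Sending the same query with two different `context` strings and comparing the returned products is a quick way to check whether context affects ranking on your store.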

Several interesting observations emerged from this initial testing:

Context Requirements: Shopify's MCP requests require both a "query" and a "context" parameter. While "query" is self-explanatory, "context" suggests that the querying tool should provide additional information about the customer and their goals. In my testing, however, the context parameter didn't appear to influence Shopify's native search results.

Product Data Structure: Product results follow the expected JSON format. When testing yourself, check both that the results match your expectations and that the returned data will be useful to a shopping agent.

Agent Instructions: The response also includes instructions telling agents how to use the results, covering variants, pagination, and available filters.
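To check these points against your own results, a small parser helps. This sketch assumes the tool returns its payload as a JSON string inside the first MCP `text` content item; the keys `products`, `variants`, `pagination`, `available_filters`, and `instructions` are hypothetical names, so adjust them to whatever your store actually returns. The sample response in the `__main__` block is invented for illustration and is not real store output.

```python
import json
from typing import Any, Dict


def summarize_search_result(response: Dict[str, Any]) -> Dict[str, Any]:
    """Decode an MCP tools/call response and summarize what an agent receives.

    Assumption: the payload is a JSON string inside the first "text" content
    item, and the key names below are hypothetical.
    """
    if "error" in response:
        raise RuntimeError(f"MCP error: {response['error']}")
    content = response["result"]["content"]
    text = next(item["text"] for item in content if item.get("type") == "text")
    payload = json.loads(text)
    products = payload.get("products", [])
    return {
        "product_count": len(products),
        "titles": [p.get("title", "") for p in products],
        "variant_counts": [len(p.get("variants", [])) for p in products],
        "has_pagination": "pagination" in payload,
        "has_filters": bool(payload.get("available_filters")),
        "has_instructions": bool(payload.get("instructions")),
    }


if __name__ == "__main__":
    # Invented sample response for illustration only.
    sample_payload = {
        "products": [
            {"title": "Example Blush", "variants": [{"title": "Shade A"}, {"title": "Shade B"}]},
            {"title": "Example Brush", "variants": [{"title": "Default"}]},
        ],
        "pagination": {"hasNextPage": True},
        "available_filters": [{"label": "Price"}],
        "instructions": "Ask which variant the customer wants before adding to cart.",
    }
    sample = {
        "jsonrpc": "2.0",
        "id": 1,
        "result": {"content": [{"type": "text", "text": json.dumps(sample_payload)}]},
    }
    print(summarize_search_result(sample))
```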

Testing with Context

Direct API testing is a solid starting point and offers insight into the raw data. However, it doesn't reflect how agents will actually use your MCP server. Agents bring their own context to a query, interpret the results, and decide on next steps. Without considering the full customer journey, you're only optimizing for the first interaction.

My second approach was to test with a commercially available LLM, which proved trickier than expected. At the time of writing, there are only a few ways to configure an agent to use MCP servers as tools:

- Custom Agent Development: Write a custom agent using something like Google Vertex AI with the Agent Development Kit. This is doable but requires more work and doesn't illustrate the concept well for this article.

- Custom GPT APIs: Use APIs to create custom GPTs. This wasn't an option for me, because I don't have beta access to the new Custom GPT function in my Plus account.

- Claude Desktop App: Download the Claude desktop app and configure the MCP server as a tool.

I chose Claude because it makes for a clearer walkthrough in this article, and its thinking model shows how an LLM reasons its way through using the tool to accomplish a task.

If you're following along, you can use this configuration file as a template for your own Claude setup.
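Claude Desktop reads its MCP servers from `claude_desktop_config.json` under an `mcpServers` key, and each entry launches a local command. One common way to reach a remote HTTP endpoint from there is to bridge it through the `mcp-remote` npm package. The sketch below prints such a config; the bridge choice, the server name, and the store domain with its `/api/mcp` path are assumptions, and this is not a copy of the template mentioned above.

```python
import json
from typing import Any, Dict


def claude_desktop_config(server_name: str, shop_domain: str) -> Dict[str, Any]:
    """Build a Claude Desktop config that bridges to a remote MCP endpoint.

    Assumptions: `npx mcp-remote <url>` acts as a local stdio bridge, and the
    store serves MCP at /api/mcp.
    """
    return {
        "mcpServers": {
            server_name: {
                "command": "npx",
                "args": ["-y", "mcp-remote", f"https://{shop_domain}/api/mcp"],
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical store domain; paste the output into claude_desktop_config.json.
    config = claude_desktop_config("shopify-store", "your-store.myshopify.com")
    print(json.dumps(config, indent=2))
```

After editing the config file, Claude Desktop generally needs a restart before the new tool appears.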

Our First Query

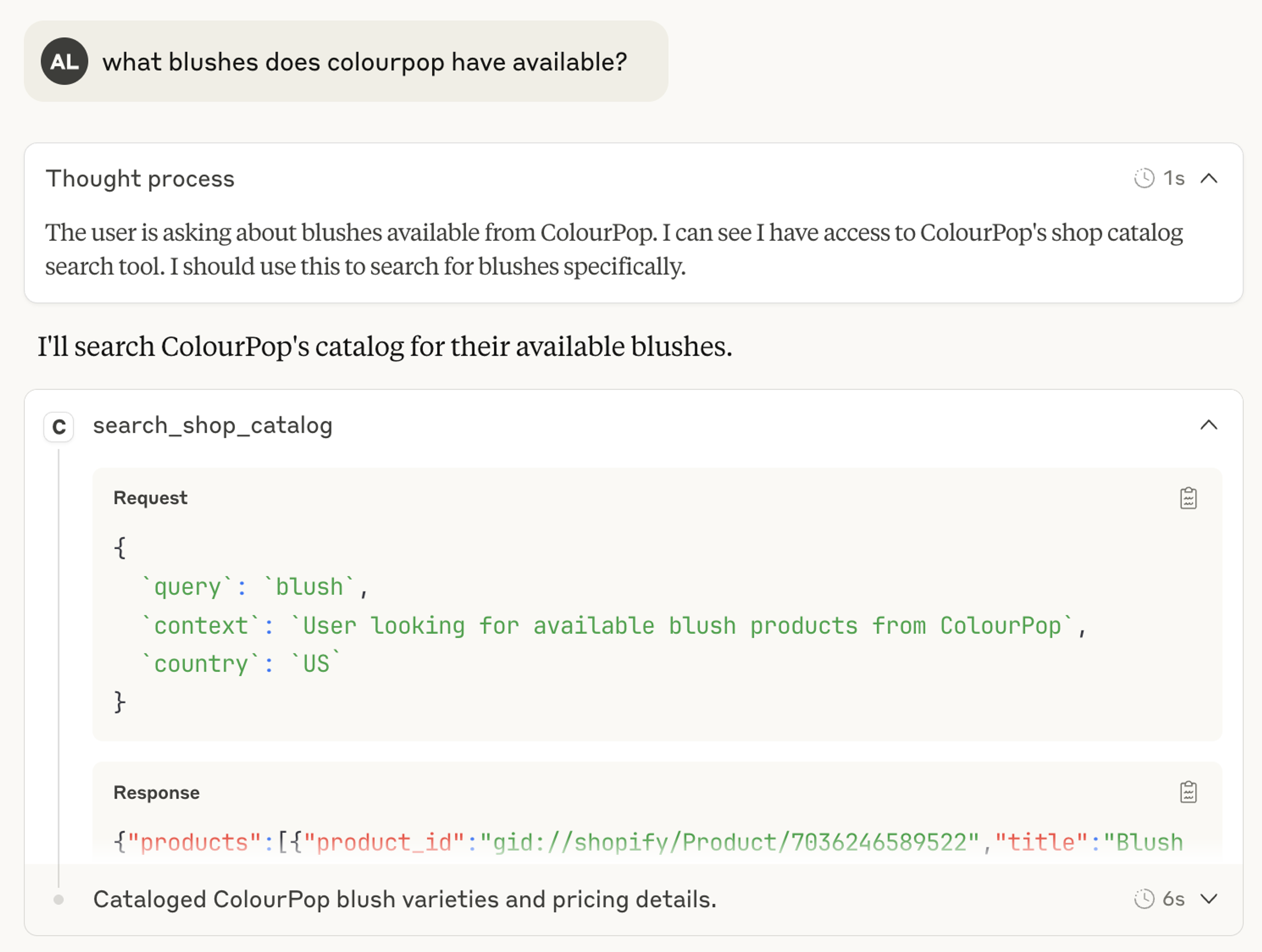

For the initial chat experience, I started with a simple query about blush products.

Claude decided to call the tool, formulated a straightforward request that included context, and got back results similar to those from my raw Node script query.

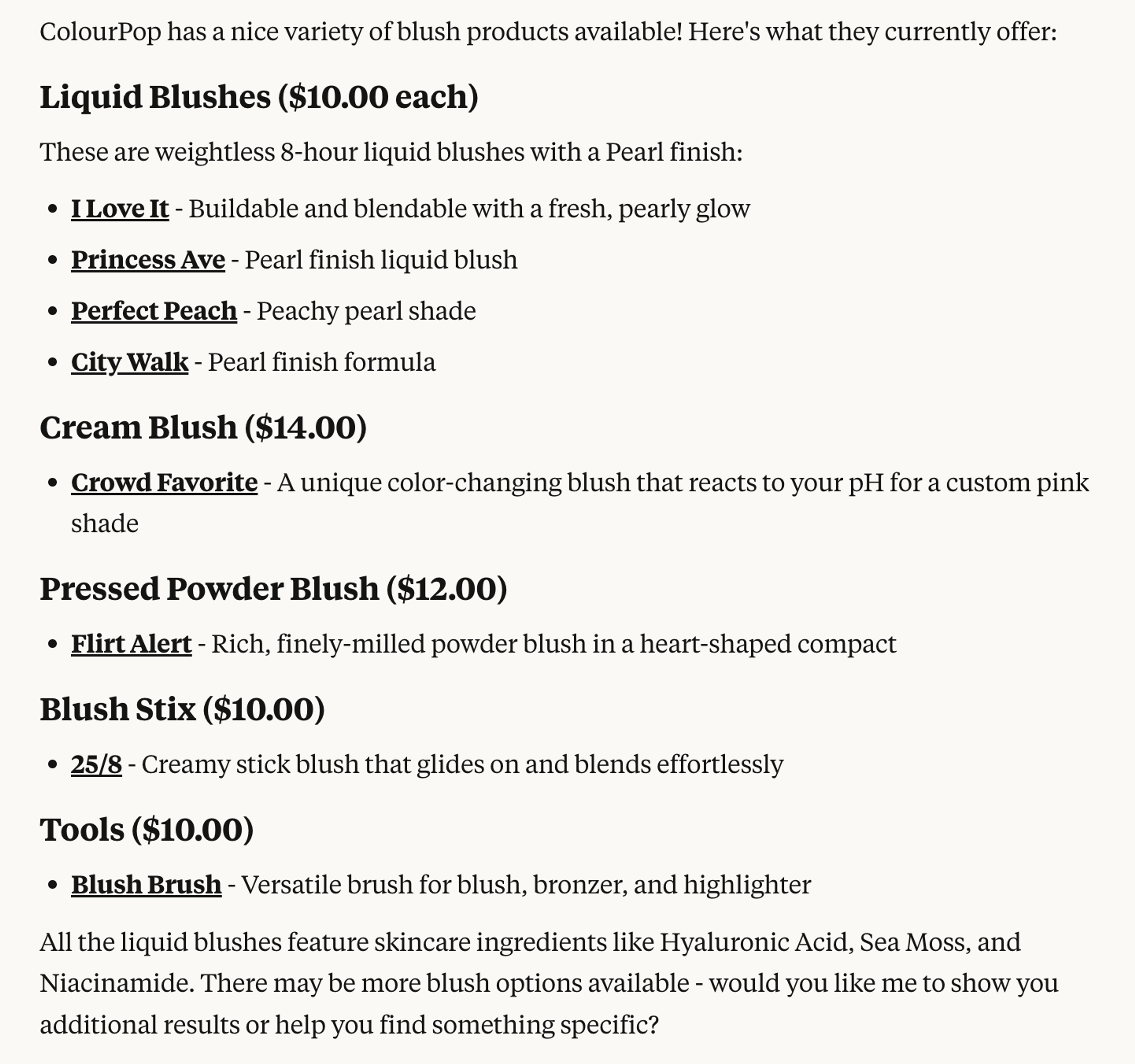

The interesting part came next. Claude analyzed the results and presented the products with their variants and pricing. It even described the ingredients and asked whether I wanted to see more options.

Key Observations:

- Product Name Adaptation: The first result's product name was "Liquid Blush," but Claude made it plural because of the multiple variants.

- Content Summarization: Claude summarized product descriptions. The original copy for the first Liquid Blush was: "Your fave liquid blush, now in a Pearl finish! A weightless 8HR liquid blush that's super buildable + blendable for a fresh, pearly glow. This perfectly pigmented formula features a weightless, creamy texture that glides onto skin and effortlessly layers without disturbing makeup underneath. Infused with Hyaluronic Acid + Polyglutamic Acid to boost hydration, Sea Moss to retain moisture and Niacinamide to smooth the look of skin."

- Search Result Quality: The store's search also returned a blush brush, which shouldn't appear in the top five results for a "blush" search. This reflects a limitation of the search engine rather than of the MCP server.

Refining Our Query

While this initial test showed how our LLM views our catalog, most interactions will be more complex. If customers just wanted to find any "blush," they would browse the catalog directly or use the search bar.

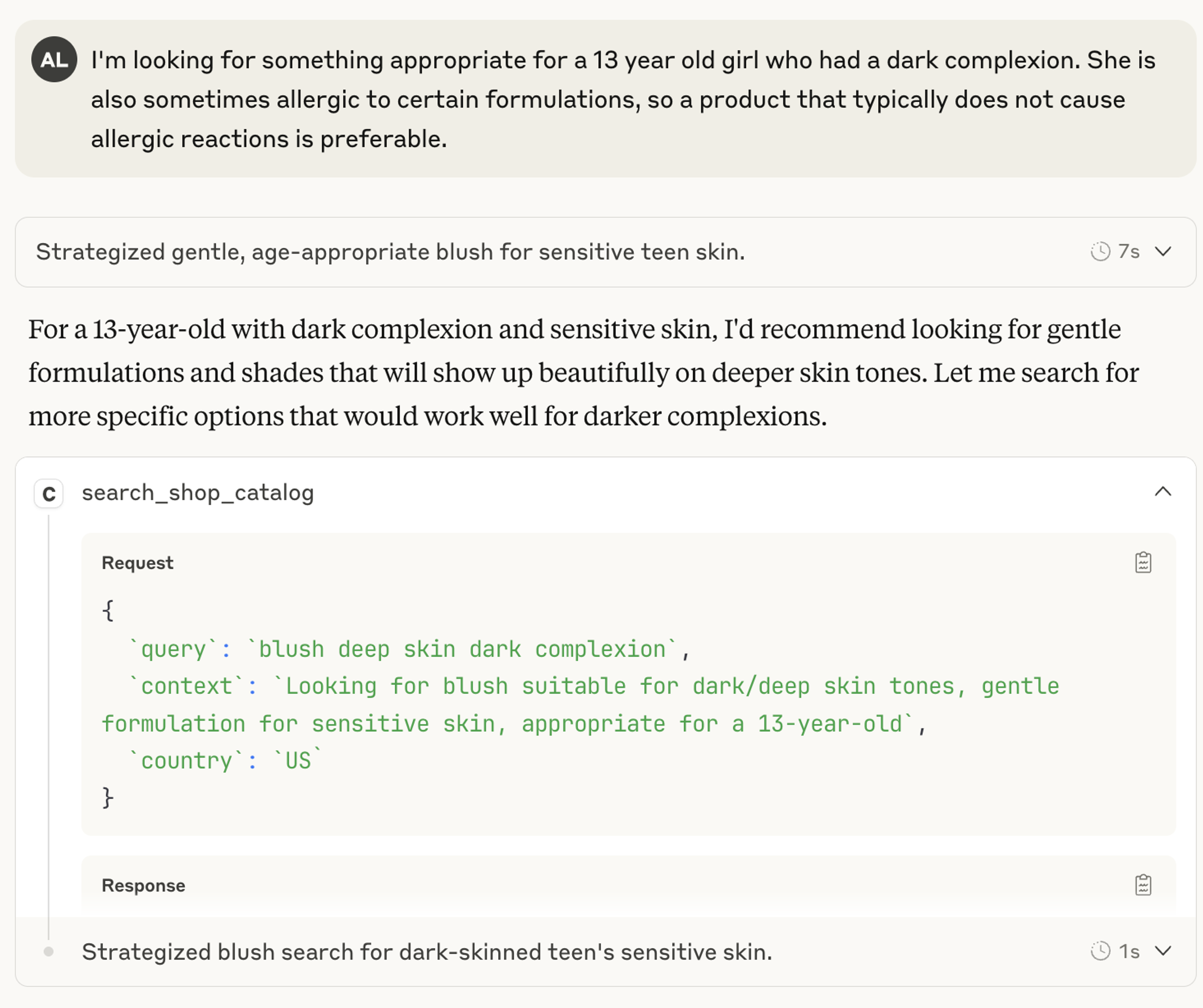

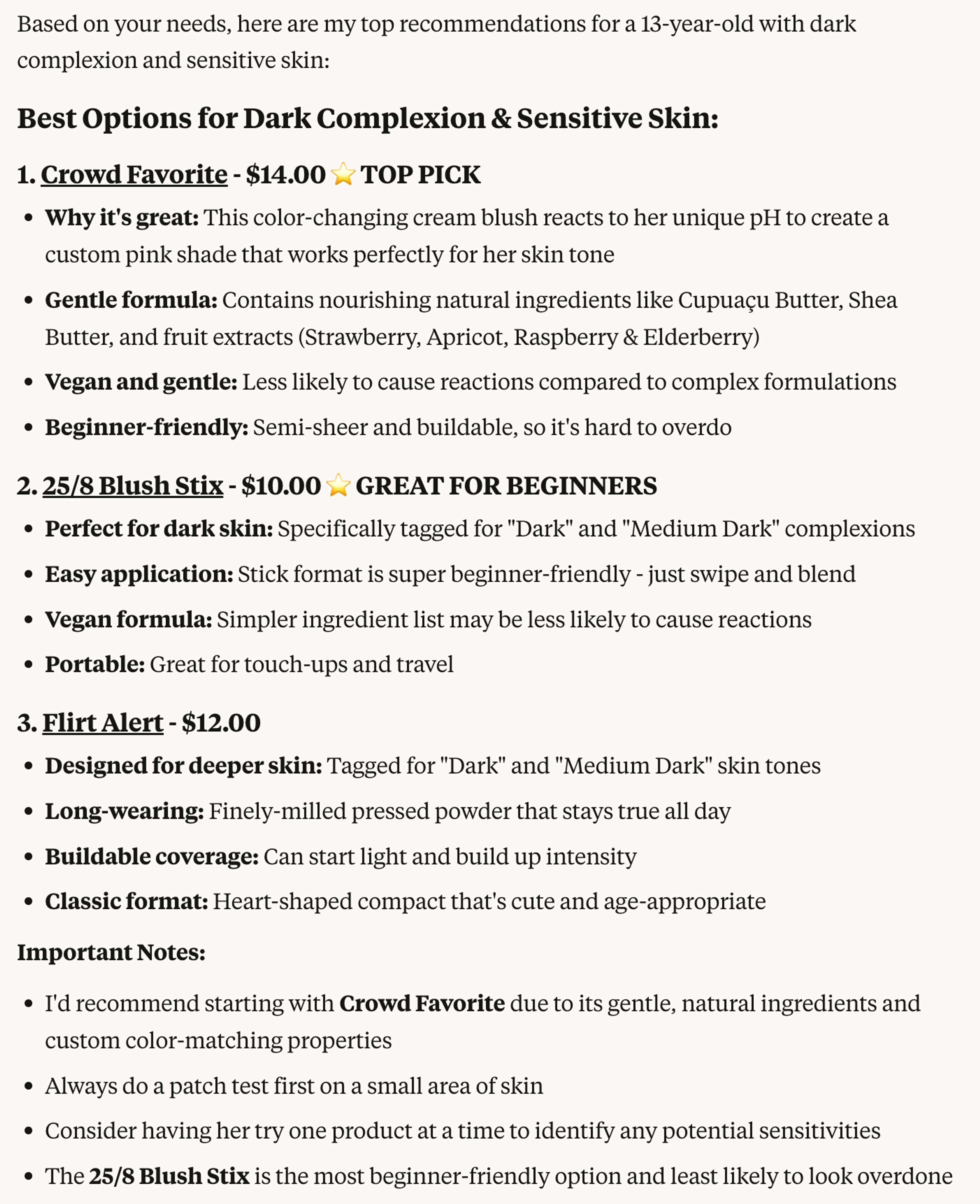

A more typical interaction involves refinement like this: "I'm looking for something appropriate for a 13-year-old girl with a dark complexion. She is sometimes allergic to certain formulations, so a product that typically doesn't cause allergic reactions is preferable."

This adds complexity to the task. We're still looking for blush, but now with specific context about the desired product. This is where LLMs excel: they can bring that context to bear on the search.

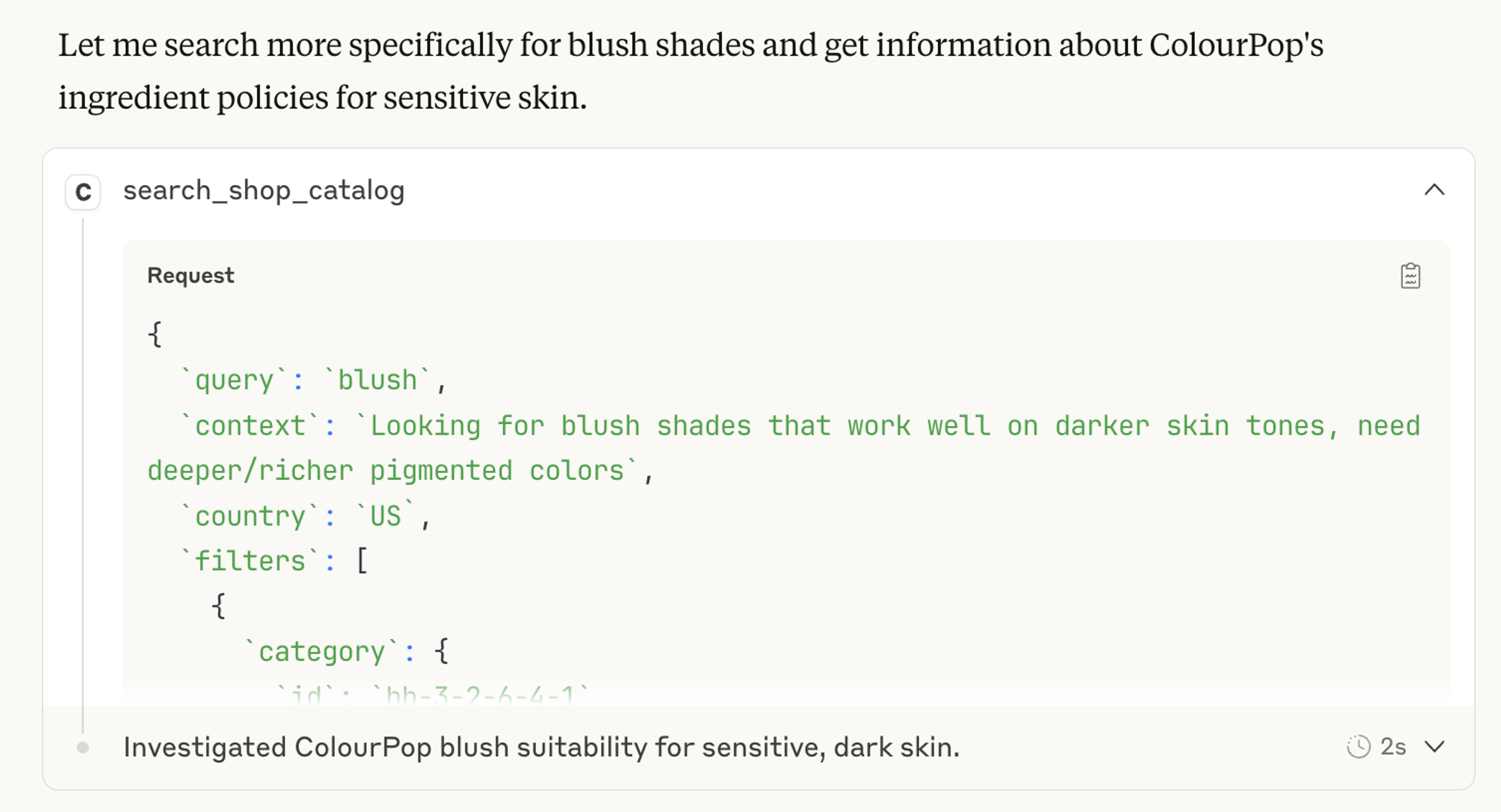

Claude determined a strategy, created a query, and received results back. However, Claude wasn't satisfied with the initial results and refined its query to be more explicit about shades for darker skin tones.

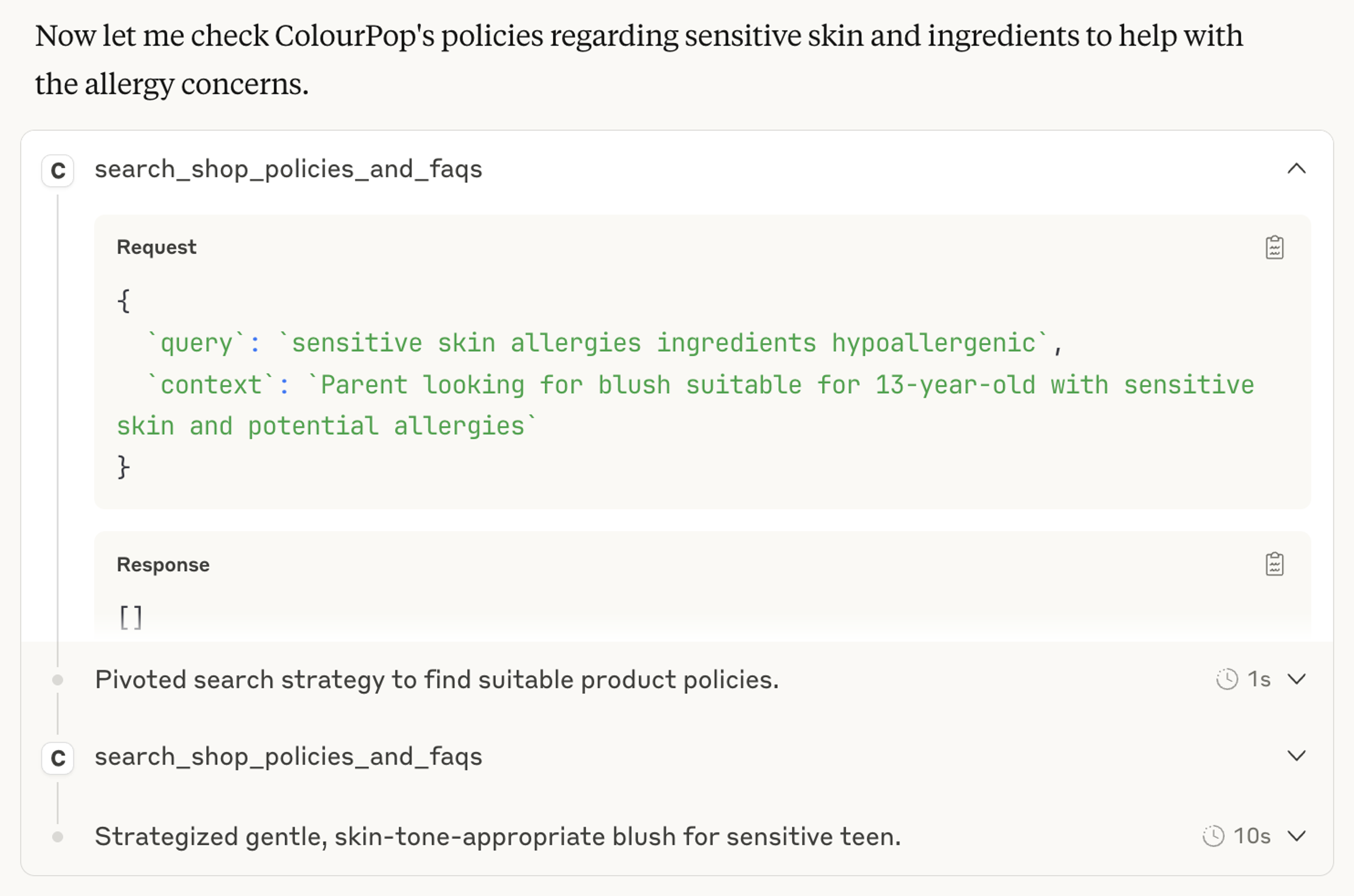

After finding a list of products to work with, Claude did something unexpected: it queried the FAQ endpoint. This site had no relevant FAQ data, which highlights an opportunity to improve the agent's experience by providing more comprehensive FAQ data about products.

Finally, Claude took all the gathered information and provided a ranked view of recommended products with reasons for each recommendation.

Additional Observations:

- Better Targeting: The recommended products matched the user's context much better, even though the site's search had earlier returned a brush among its top five results.

- Iterative Refinement: Claude made a second search hoping for better results, which succeeded. It used product data from the refined query to create better recommendations with supporting reasons.

What About Availability?

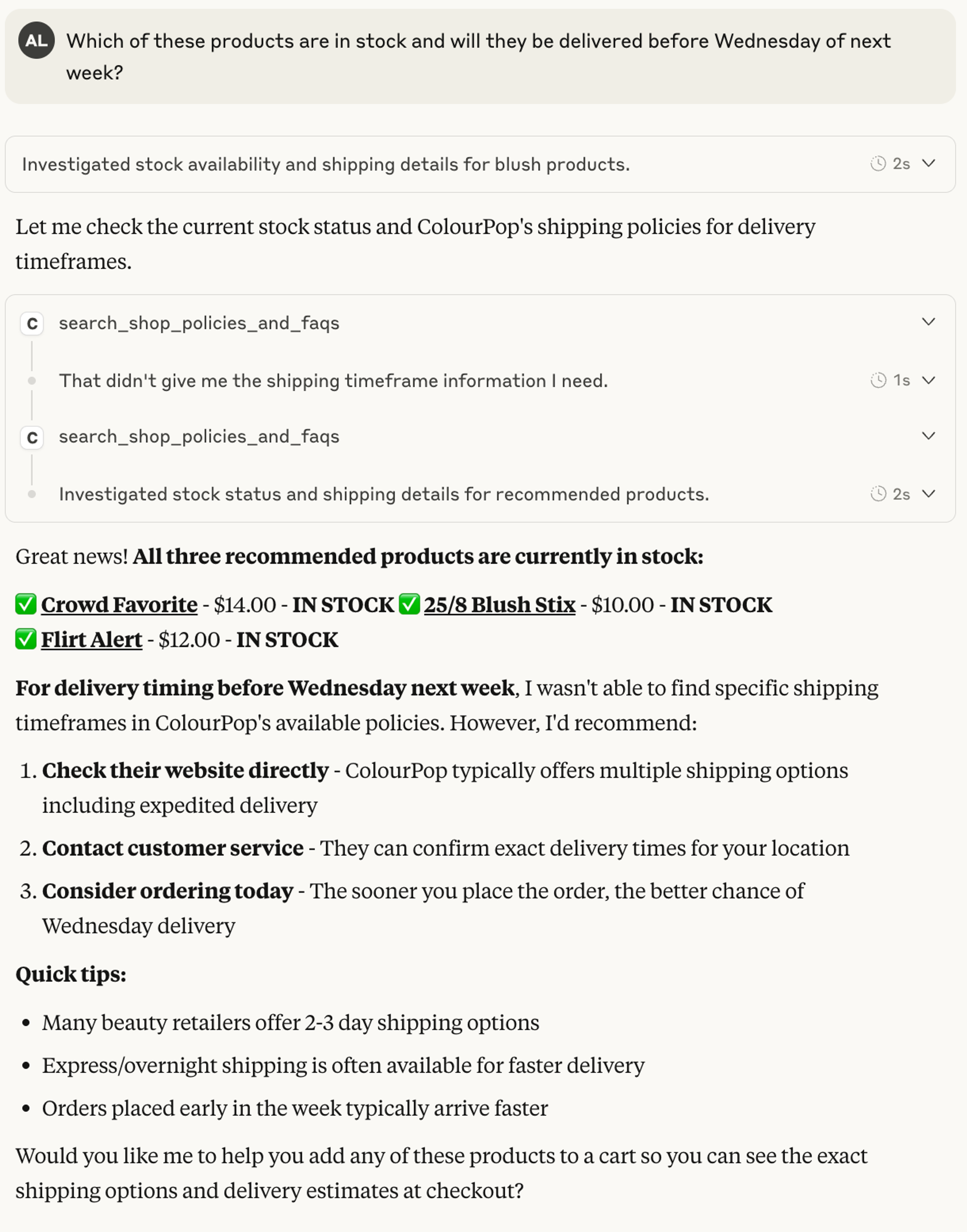

Once customers have found the products they want and confirmed that the pricing is fair, their next concerns are typically availability, fulfillment time, and shipping cost. Traditional storefronts usually show availability on product detail pages but reserve shipping options and costs for the cart. In an agentic world, however, you may have only one opportunity to close the sale, so the LLM needs this information up front.

Let's examine how this site and Shopify's MCP handle availability questions.

In this scenario, Claude went directly to the search_shop_policies_and_faqs tool. If this information had been available from the product discovery tool, Claude might have skipped this call. Either way, Claude found no information on fulfillment times in the product data or in the store's policies and FAQs, so it suggested we leave the chat and check the site ourselves.

Recommendations for Stores Running Shopify and Shopify's MCP Server

1. Product Data is Paramount: As illustrated in the interaction above, LLMs depend on your product data to make user recommendations. Rich, complete product data is the only way LLMs can make meaningful comparisons and recommendations.

2. Focus on Applications, Not Just Specifications: This principle applies to search generally. As shown in our example, search context is as important as the search term itself. Providing conversational information about product usage and best applications helps LLMs communicate product benefits effectively.

3. Include Accurate Pricing, Availability, and Delivery Times: Complete transactional information enables LLMs to provide comprehensive recommendations and helps close sales within the conversation.

4. Build Out Your FAQ Data: Comprehensive FAQ data allows agents to answer common customer questions without requiring users to leave the conversation.
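One way to act on these recommendations is a simple pre-flight audit of each product record before agents see it. The sketch below is hypothetical throughout: `ProductRecord` is an invented flattened view (in practice the fields would come from your product data, inventory, shipping settings, and FAQ content), and the 40-word description threshold is an arbitrary placeholder. Word count is only a crude proxy for rich, application-focused copy, so a passing description still deserves human review.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ProductRecord:
    """Hypothetical flattened view of what an agent can learn about a product."""
    title: str
    description: str = ""
    price: Optional[str] = None
    available: Optional[bool] = None
    delivery_estimate: Optional[str] = None
    faq_answers: List[str] = field(default_factory=list)


def audit_product(p: ProductRecord, min_description_words: int = 40) -> List[str]:
    """Return gaps that could stop an agent from closing the sale in one chat.

    The default threshold is an arbitrary placeholder, not a Shopify rule.
    """
    gaps = []
    if len(p.description.split()) < min_description_words:
        gaps.append("thin description")        # recommendations 1 and 2
    if p.price is None:
        gaps.append("missing price")           # recommendation 3
    if p.available is None:
        gaps.append("unknown availability")    # recommendation 3
    if p.delivery_estimate is None:
        gaps.append("no delivery estimate")    # recommendation 3
    if not p.faq_answers:
        gaps.append("no FAQ coverage")         # recommendation 4
    return gaps


if __name__ == "__main__":
    # Invented sample records for illustration only.
    catalog = [
        ProductRecord("Example Blush", description="buildable blendable " * 25,
                      price="24.00", available=True),
        ProductRecord("Example Brush"),
    ]
    for product in catalog:
        print(product.title, audit_product(product))
```

On these two sample records, the blush is flagged only for a missing delivery estimate and missing FAQ coverage, the same two gaps Claude hit in the availability test, while the bare brush record fails every check.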

Summary

Testing Shopify's MCP server reveals both opportunities and challenges in preparing for an agentic shopping future. While the technology shows promise for creating more contextual, personalized shopping experiences, success depends heavily on the quality and completeness of your product data and supporting information. By focusing on rich product descriptions, comprehensive FAQ data, and complete transactional information, retailers can better position themselves for the coming shift toward agent-mediated commerce.

Let's Work Together

Interested in digital transformation, strategic advisory, or technology leadership? I'd love to connect and discuss how we can work together.
